Journal of Nanjing University (Natural Science), 2023, Vol. 59, Issue 3: 388–397. doi: 10.13232/j.cnki.jnju.2023.03.003

Diversity-induced multi-view clustering in latent embedded space

Yifan Zhang1,2, Ting Li1,2, Hongwei Ge1,2

- 1. School of Artificial Intelligence and Computer Science, Jiangnan University, Wuxi, 214122, China
- 2. Jiangsu Provincial Engineering Laboratory of Pattern Recognition and Computational Intelligence, Jiangnan University, Wuxi, 214122, China

Abstract:

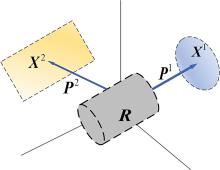

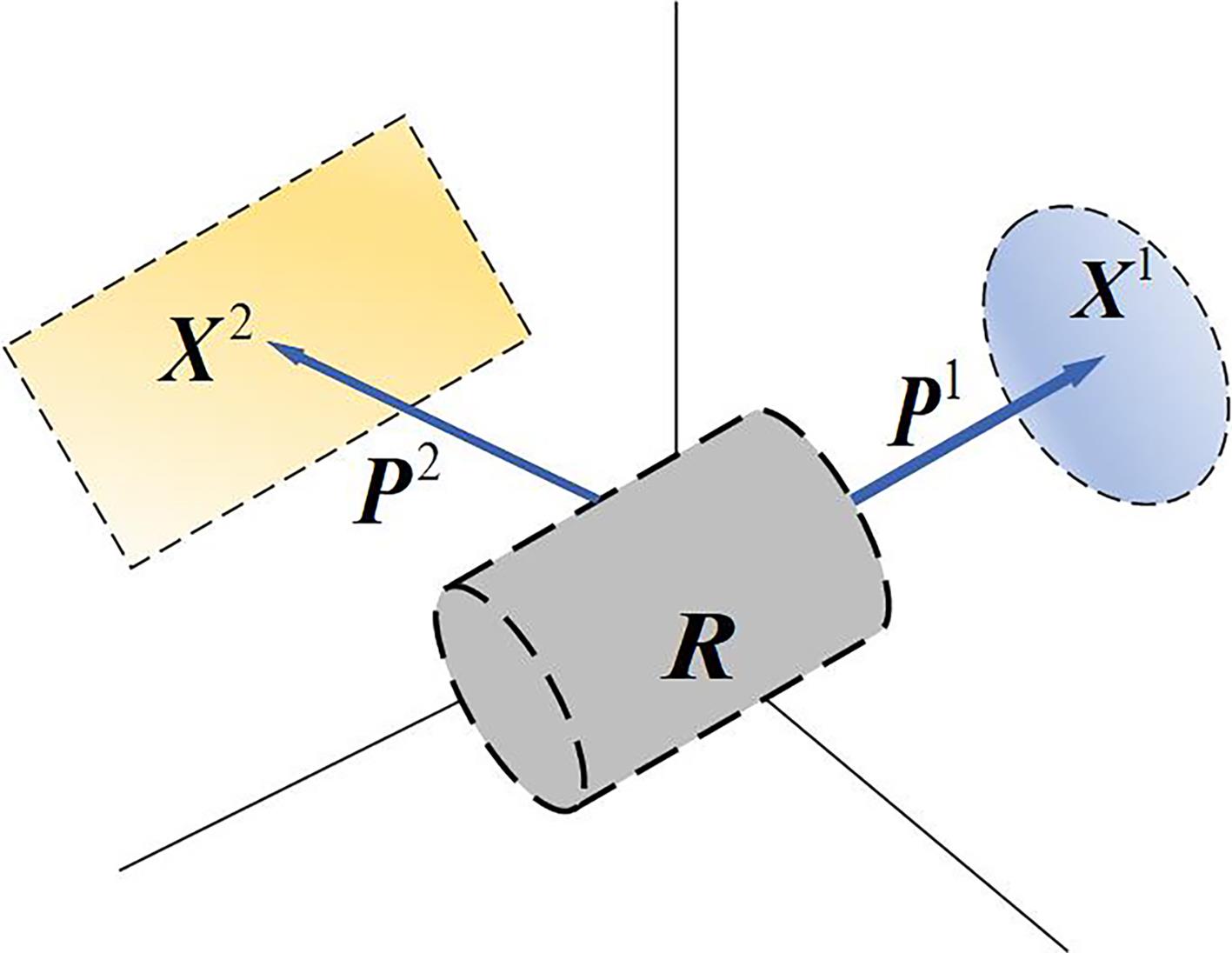

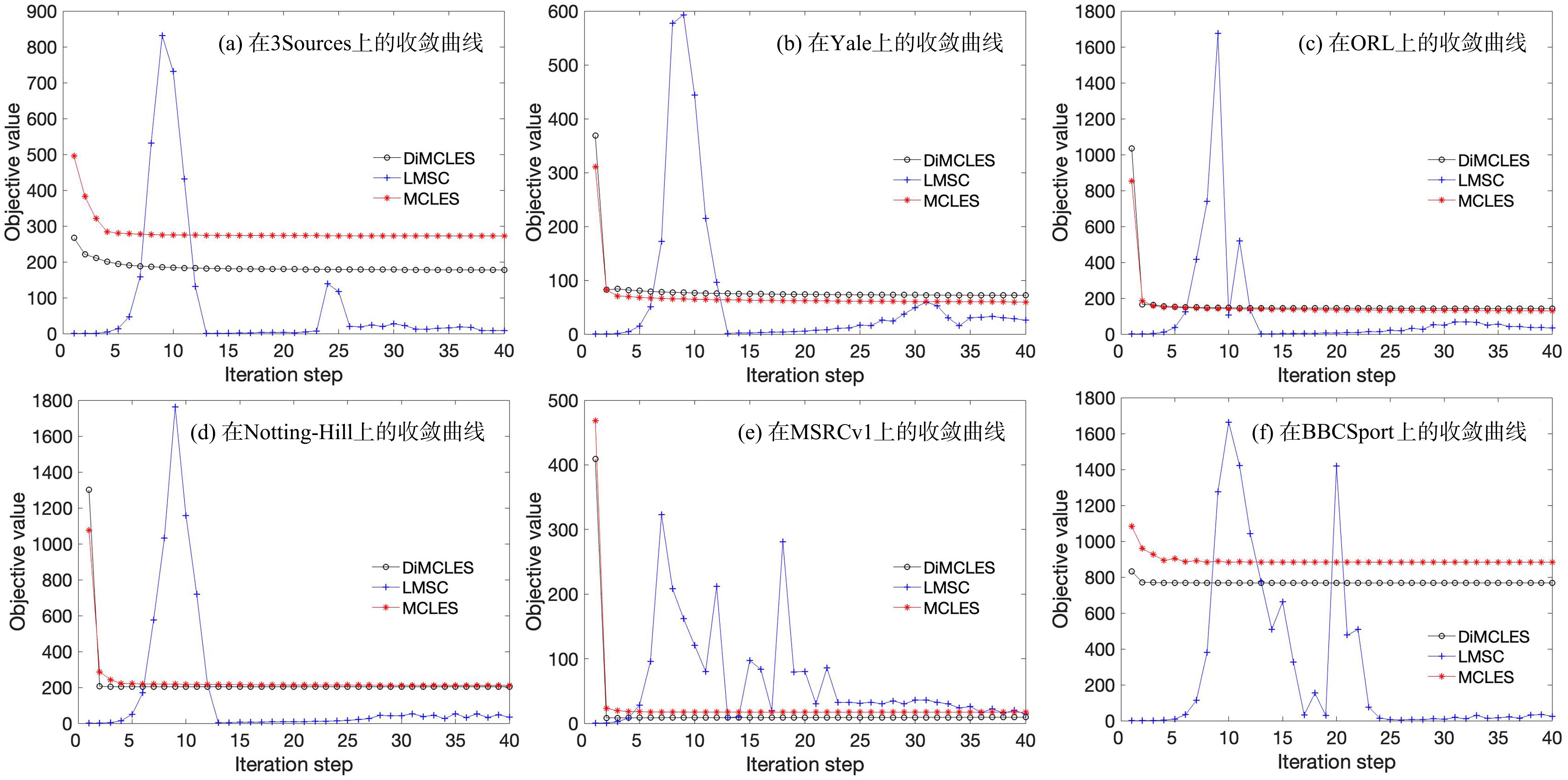

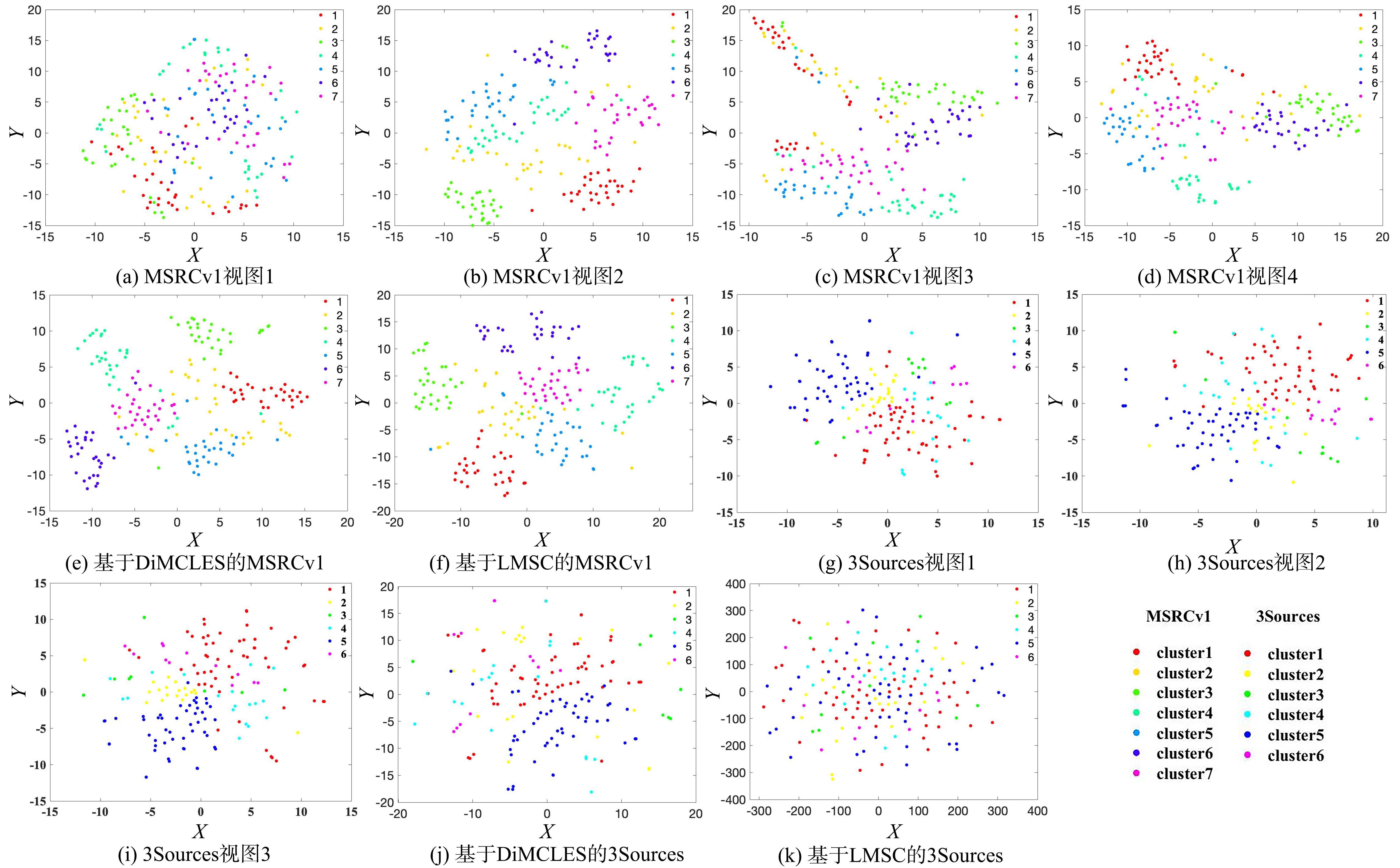

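Multi-view subspace clustering has been widely studied in pattern recognition and machine learning. Most previous multi-view clustering algorithms partition multi-view data in its original feature space, so their effectiveness depends implicitly, and to a large extent, on the quality of the original feature representation. In addition, different views carry view-specific information about the same object, and how to use these views to recover the latent diversity information is especially important for the subsequent clustering. To address these problems, a diversity-induced multi-view clustering method in latent embedded space is proposed, which uses view-specific projection matrices to recover a latent embedding space from multi-view data. To account for the diversity information among the different views, the empirical Hilbert-Schmidt Independence Criterion (HSIC) is adopted to constrain the view-specific projection matrices. Latent embedding learning, diversity learning, global similarity learning, and clustering indicator learning are integrated into a single framework, and an alternating optimization scheme is designed to solve the resulting optimization problem efficiently. Experiments on several real-world multi-view datasets show that the proposed algorithm has certain advantages.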
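As an illustration of the diversity term named in the abstract, the following is a minimal sketch of the standard biased empirical HSIC estimator, tr(KHLH)/(n-1)^2, where H is the centering matrix. The linear kernel, the random data, and the function names `linear_gram` and `empirical_hsic` are assumptions made for this example; the abstract does not state which kernel is used or exactly how the criterion is applied to the projection matrices.

```python
import numpy as np


def linear_gram(X):
    """Gram matrix of a linear kernel; rows of X are samples (assumed layout)."""
    return X @ X.T


def empirical_hsic(K, L):
    """Biased empirical HSIC between two n x n Gram matrices: tr(K H L H) / (n - 1)^2."""
    n = K.shape[0]
    H = np.eye(n) - np.ones((n, n)) / n  # centering matrix H = I - (1/n) 1 1^T
    return np.trace(K @ H @ L @ H) / (n - 1) ** 2


# Hypothetical data: B is drawn independently of A, C is a linear function of A.
rng = np.random.default_rng(0)
A = rng.standard_normal((50, 4))
B = rng.standard_normal((50, 3))
C = A @ rng.standard_normal((4, 3))

hsic_independent = empirical_hsic(linear_gram(A), linear_gram(B))
hsic_dependent = empirical_hsic(linear_gram(A), linear_gram(C))
print(hsic_independent, hsic_dependent)
```

A smaller HSIC value indicates weaker statistical dependence, so in this sketch the independent pair should score lower than the dependent pair. Penalizing HSIC between view-specific quantities is one common way to encourage them to capture complementary information.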

CLC number:

- TP391.41

