Journal of Nanjing University (Natural Science), 2020, Vol. 56, Issue 1: 41–50. doi: 10.13232/j.cnki.jnju.2020.01.005

A feature selection method based on an improved grasshopper optimization algorithm

1. College of Big Data and Information Engineering, Guizhou University, Guiyang, 550025, China

2. Guizhou Provincial Key Laboratory of Public Big Data, Guizhou University, Guiyang, 550025, China

Abstract:

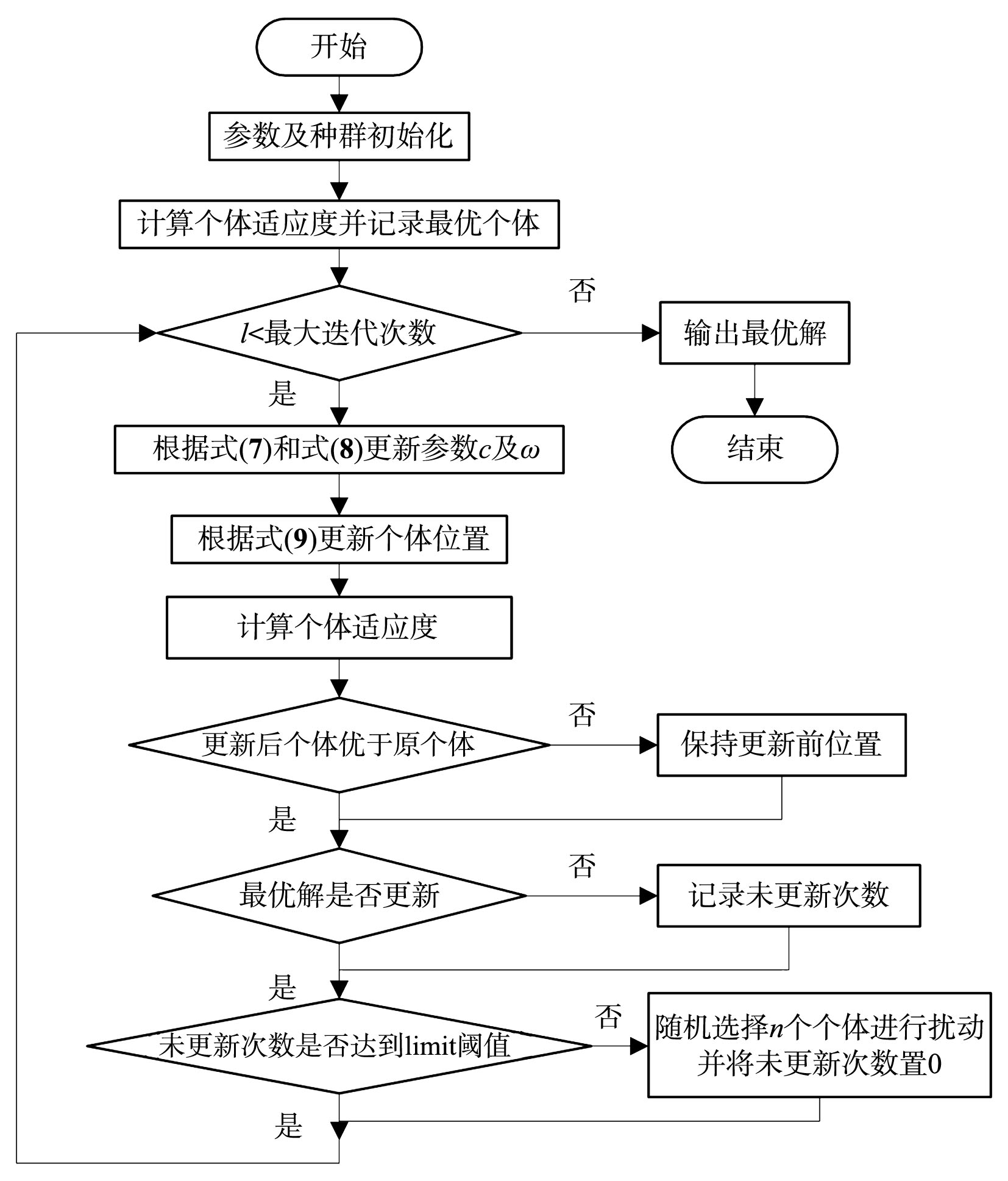

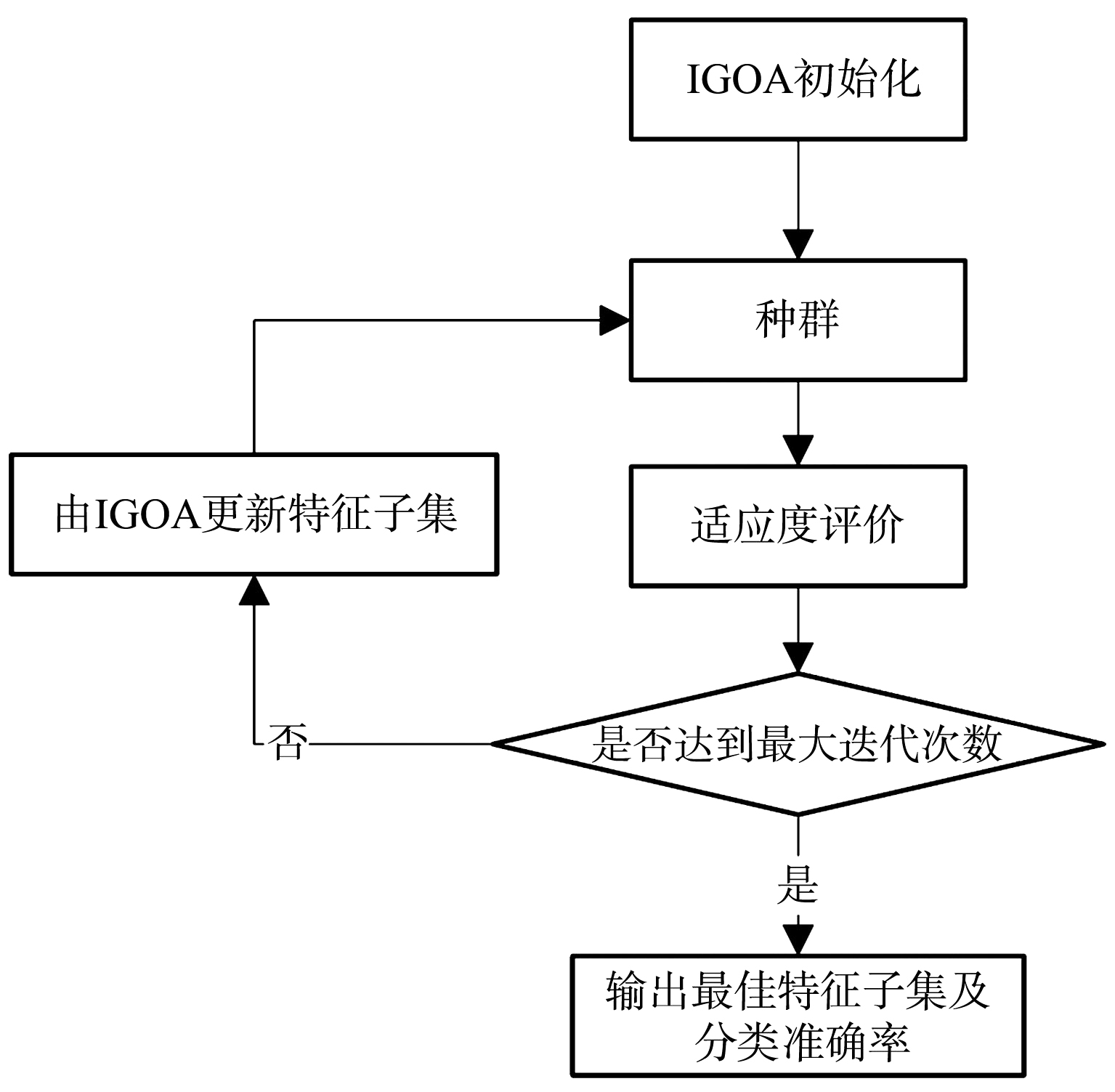

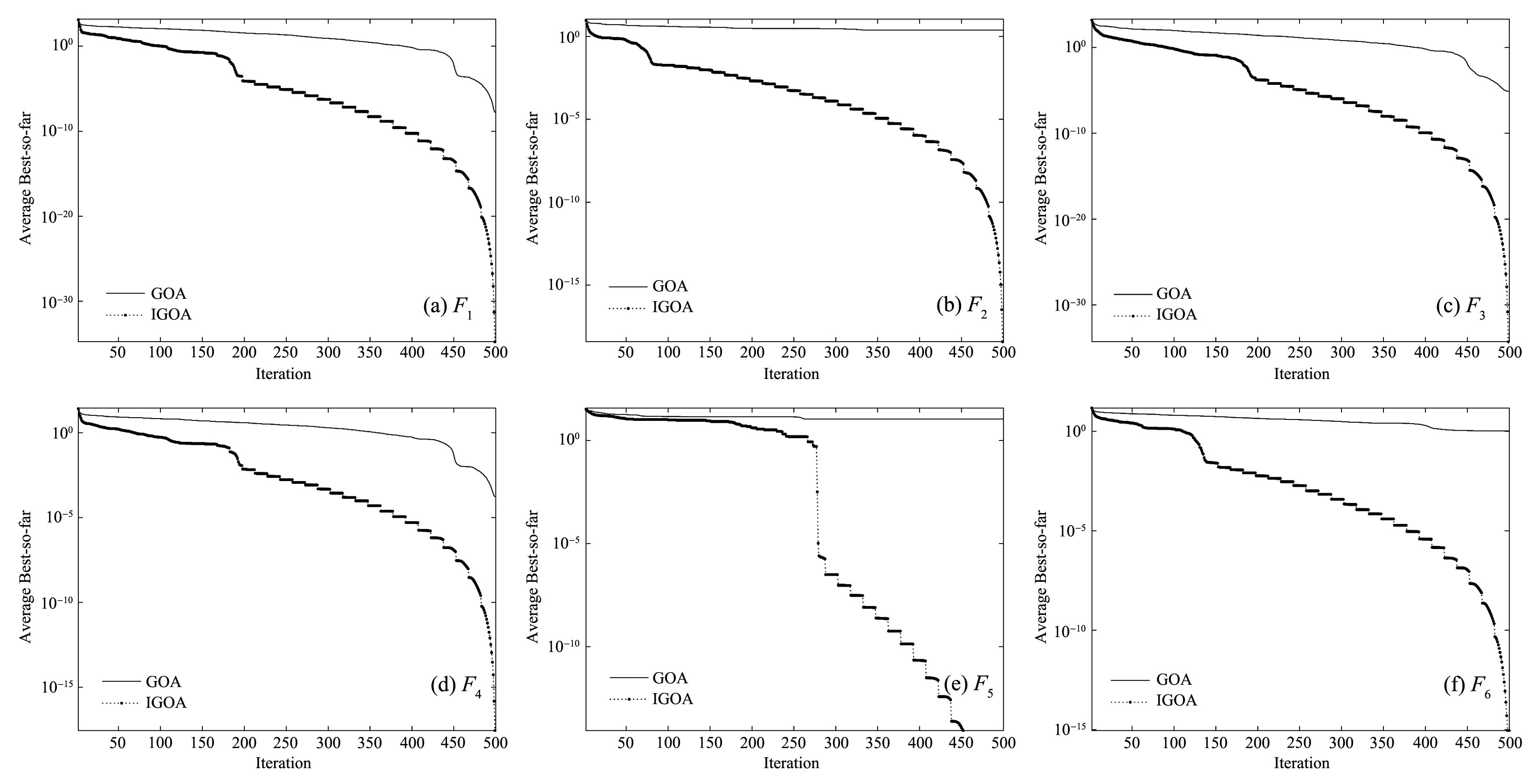

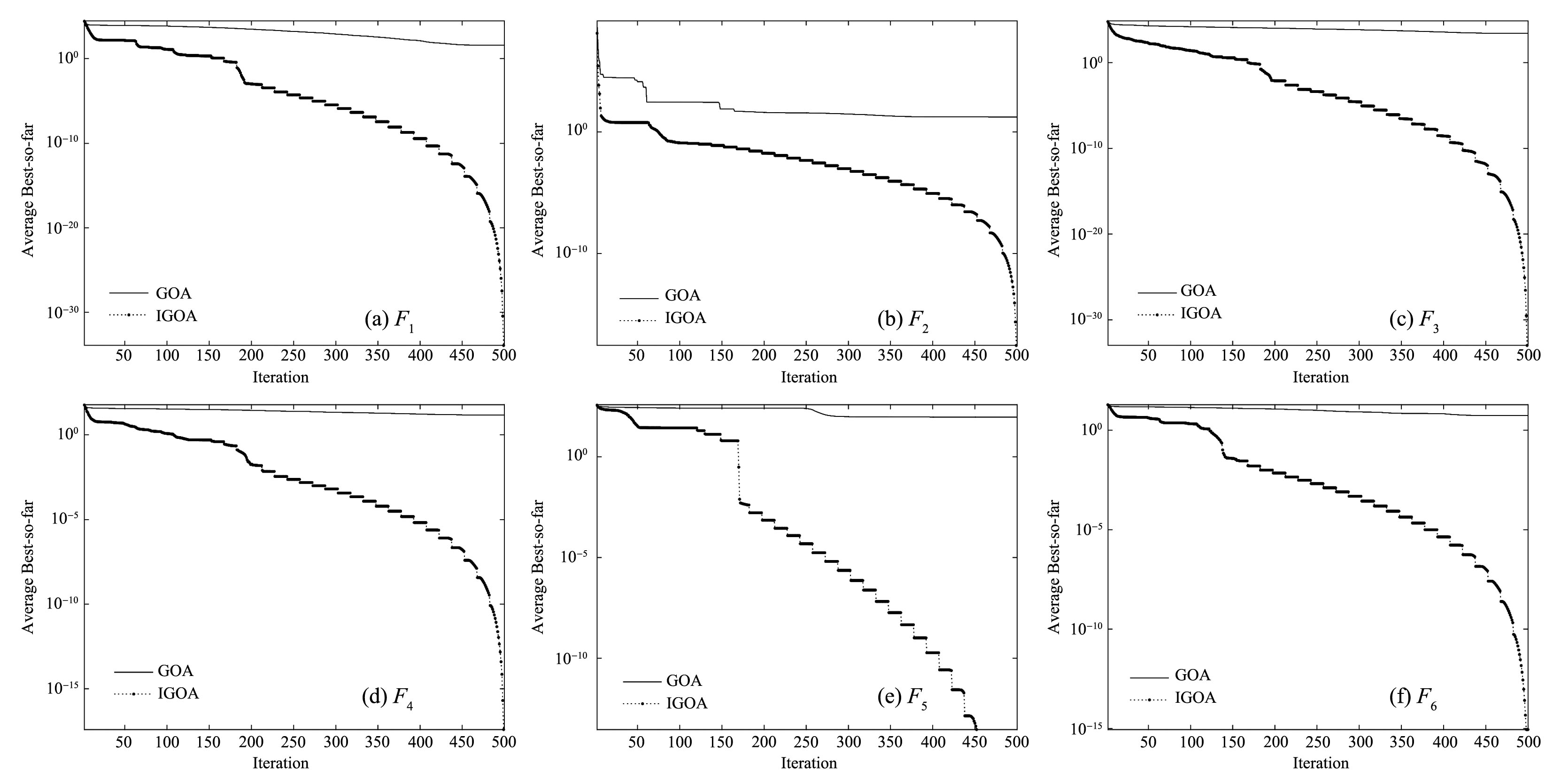

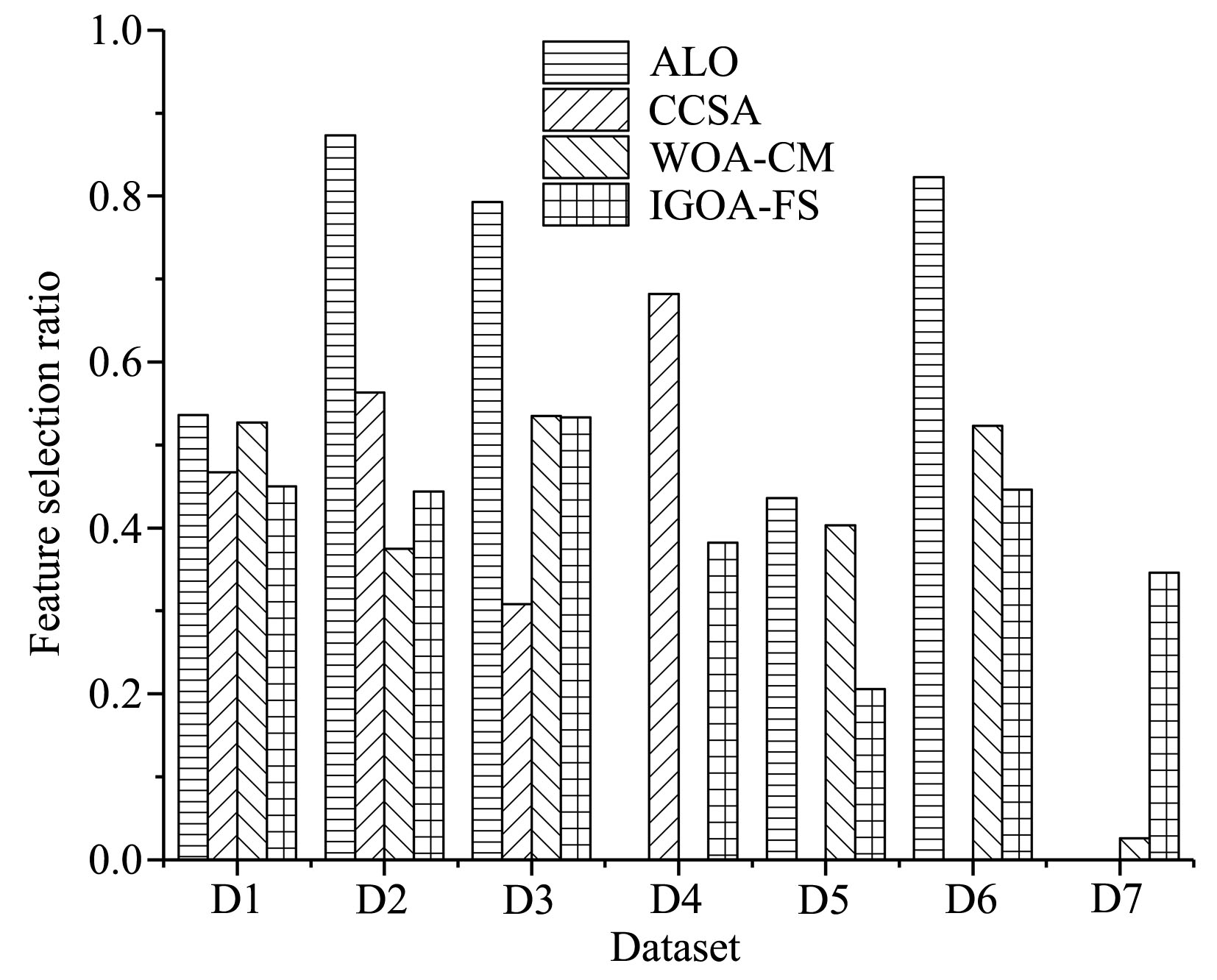

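To address the low optimization accuracy and slow convergence of the traditional grasshopper optimization algorithm, an improved grasshopper optimization algorithm based on a nonlinear adjustment strategy is proposed. First, a nonlinear parameter replaces the decreasing coefficient of the traditional algorithm, balancing global exploration against local exploitation and speeding up convergence. Second, an adaptive weight coefficient is introduced to change how grasshopper positions are updated, which improves optimization accuracy. Third, drawing on the idea of a limit threshold, the nonlinear parameter is used to perturb some individuals in the population so that the algorithm avoids becoming trapped in local optima. Simulation results on six benchmark test functions show that the improved algorithm clearly improves both convergence speed and optimization accuracy. Finally, the improved algorithm is applied to the feature selection problem; experimental results on seven datasets show that the feature selection method based on the improved algorithm selects features effectively and improves classification accuracy.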
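The first modification can be illustrated with a minimal sketch. The standard grasshopper optimization algorithm shrinks its coefficient linearly, c = c_max − t·(c_max − c_min)/T. The abstract does not give the nonlinear form used in the paper, so the cosine-shaped schedule `nonlinear_c` below is a hypothetical stand-in, and the bounds `C_MAX` and `C_MIN` are assumed illustrative values.

```python
import math

# Illustrative bounds for the coefficient (assumed values, not from the abstract).
C_MAX = 1.0
C_MIN = 0.00004


def linear_c(t: int, max_iter: int) -> float:
    """Linearly decreasing coefficient of the standard GOA."""
    return C_MAX - t * (C_MAX - C_MIN) / max_iter


def nonlinear_c(t: int, max_iter: int) -> float:
    """Hypothetical cosine-shaped schedule (an assumption, not the paper's formula).

    It decays more slowly than the linear schedule in the first half of the
    run (more exploration) and more quickly in the second half (more
    exploitation), reaching C_MIN at the final iteration.
    """
    return C_MIN + (C_MAX - C_MIN) * 0.5 * (1.0 + math.cos(math.pi * t / max_iter))


if __name__ == "__main__":
    T = 100
    for t in (0, 25, 50, 75, 100):
        print(t, round(linear_c(t, T), 4), round(nonlinear_c(t, T), 4))
```

Both schedules start at `C_MAX` and end at `C_MIN`; they differ only in how the budget between exploration and exploitation is spent along the way.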

CLC number:

- TP301.6

