Journal of Nanjing University (Natural Science) ›› 2022, Vol. 58 ›› Issue (2): 286–297. doi: 10.13232/j.cnki.jnju.2022.02.012

An unsupervised substitution generation and ranking algorithm based on the BERT model

- 1. School of Artificial Intelligence and Computer Science, Jiangnan University, Wuxi, 214122, China
- 2. Jiangsu Key Laboratory of Media Design and Software Technology, School of Artificial Intelligence and Computer Science, Jiangnan University, Wuxi, 214122, China

Abstract:

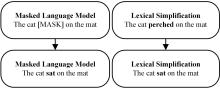

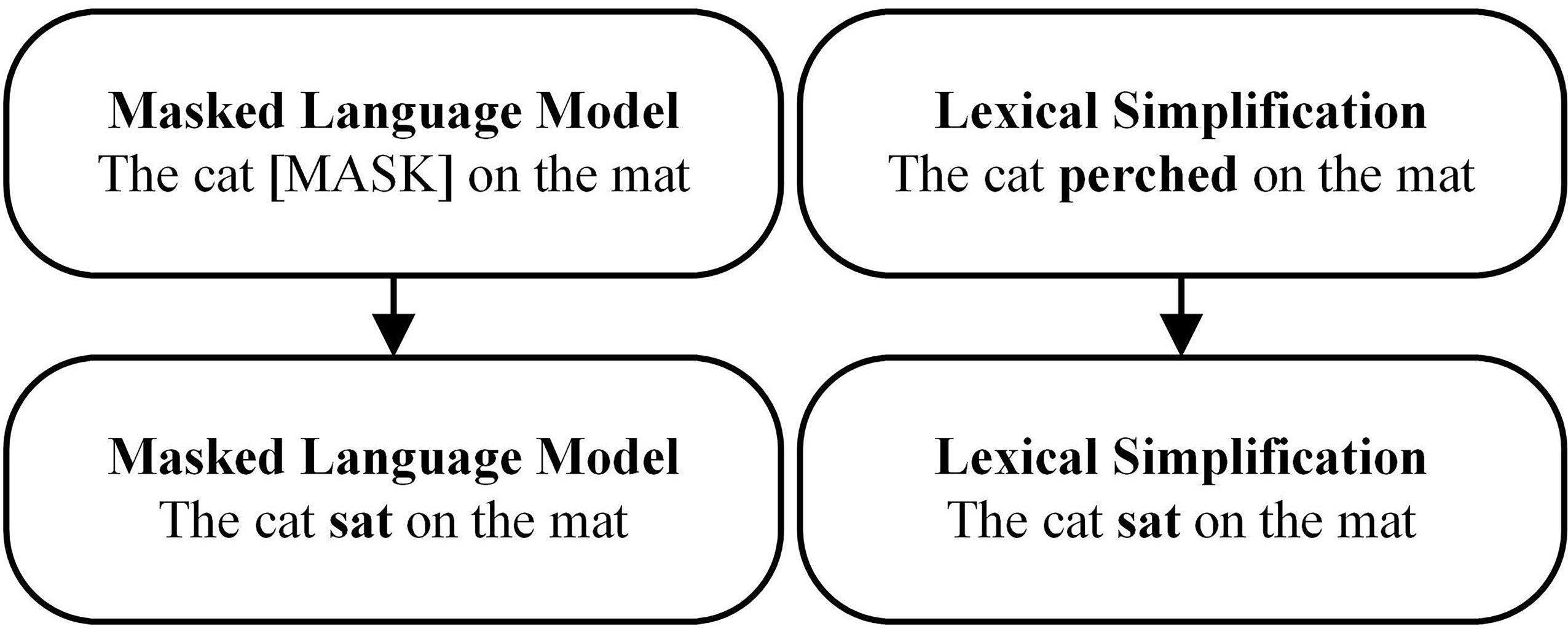

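Lexical simplification aims to replace complex words with simpler, easier-to-understand words while preserving the original meaning of the sentence and keeping it fluent. Traditional methods either rely on manually annotated datasets or focus only on the complex word itself without effectively attending to its context, so the candidate words they generate often do not fit the context. To address these two problems, this paper proposes Pretrained-LS, an unsupervised candidate generation and ranking algorithm based on the BERT (Bidirectional Encoder Representations from Transformers) model that considers both the complex word and its context. In the candidate generation stage, Pretrained-LS uses the BERT model to generate candidate words. In the candidate ranking stage, in addition to the commonly used word frequency and BERT prediction order features, Pretrained-LS proposes three ranking features: semantic similarity based on BERT word embeddings, context similarity based on RoBERTa (A Robustly Optimized BERT Pretraining Approach) vectors, and a complexity-score lexicon of common words. In the experiments, the candidate generation stage is evaluated with the widely used precision P, recall R, and their harmonic mean F, and the candidate ranking stage is evaluated with precision P and accuracy A. Experimental results on three English benchmark datasets show that, compared with the best-performing existing lexical simplification algorithm, Pretrained-LS improves the F value by 5.70% in the candidate generation stage and the accuracy A by 7.21% in the candidate ranking stage.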

CLC number:

- TP391
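
The abstract evaluates candidate generation with precision P, recall R, and their harmonic mean F. The following is a minimal sketch of how such scores could be computed, not the authors' evaluation script. It assumes the definitions commonly used in lexical simplification work: P is the share of generated candidates that appear in the gold substitutes, and R is the share of gold substitutes that were generated, both pooled over all instances. The function name `generation_prf` and the toy data are hypothetical.

```python
from typing import List, Set, Tuple


def generation_prf(
    generated: List[Set[str]], gold: List[Set[str]]
) -> Tuple[float, float, float]:
    """Return (P, R, F) pooled over all complex-word instances.

    generated[i] holds the candidates produced for instance i;
    gold[i] holds the annotated substitutes for the same instance.
    These pooled definitions are an assumption, not taken from the paper.
    """
    if len(generated) != len(gold):
        raise ValueError("generated and gold must have the same length")

    # Count candidates that also appear in the gold set of the same instance.
    hits = sum(len(g & s) for g, s in zip(generated, gold))
    n_generated = sum(len(g) for g in generated)
    n_gold = sum(len(s) for s in gold)

    precision = hits / n_generated if n_generated else 0.0
    recall = hits / n_gold if n_gold else 0.0
    # F is the harmonic mean of P and R, as stated in the abstract.
    denom = precision + recall
    f_score = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f_score


# Toy data (hypothetical): two complex-word instances.
generated_toy = [{"easy", "simple", "plain"}, {"big"}]
gold_toy = [{"easy", "simple"}, {"large", "big", "huge"}]
p, r, f = generation_prf(generated_toy, gold_toy)
print(f"P={p:.4f} R={r:.4f} F={f:.4f}")
```

Pooling counts over all instances (micro-averaging) is one design choice; averaging per-instance scores instead would weight every complex word equally and can give different numbers.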
