Journal of Nanjing University (Natural Science) ›› 2022, Vol. 58 ›› Issue (2): 298–308. doi: 10.13232/j.cnki.jnju.2022.02.013


A spatial super-resolution method for light field images by fusing global and local features

Huahua Jing, Tao Yan, Yuan Liu

- School of Artificial Intelligence and Computer Science, Jiangnan University, Wuxi, 214122, China

Abstract:

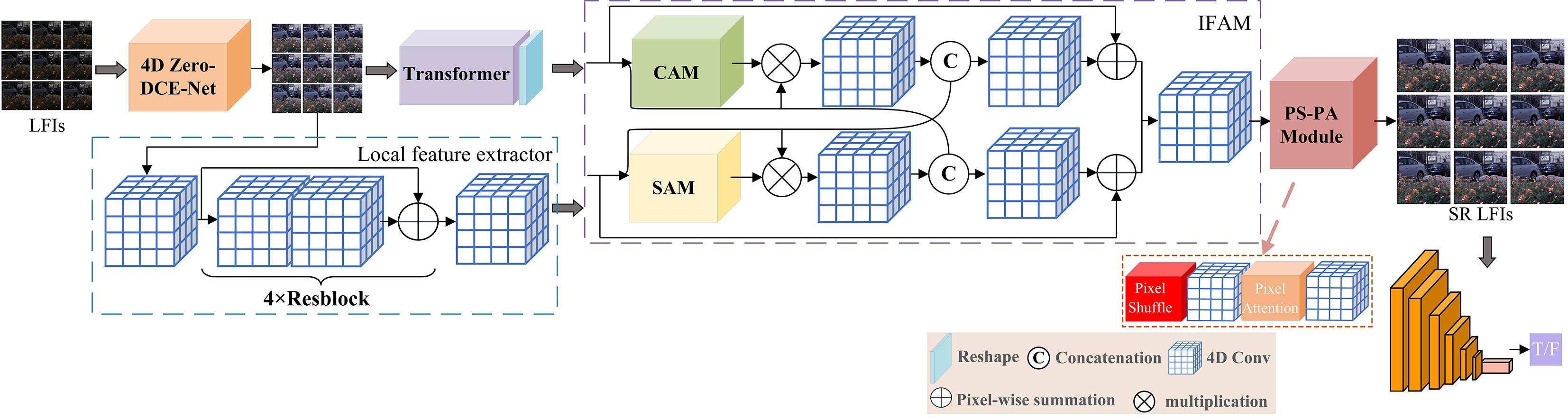

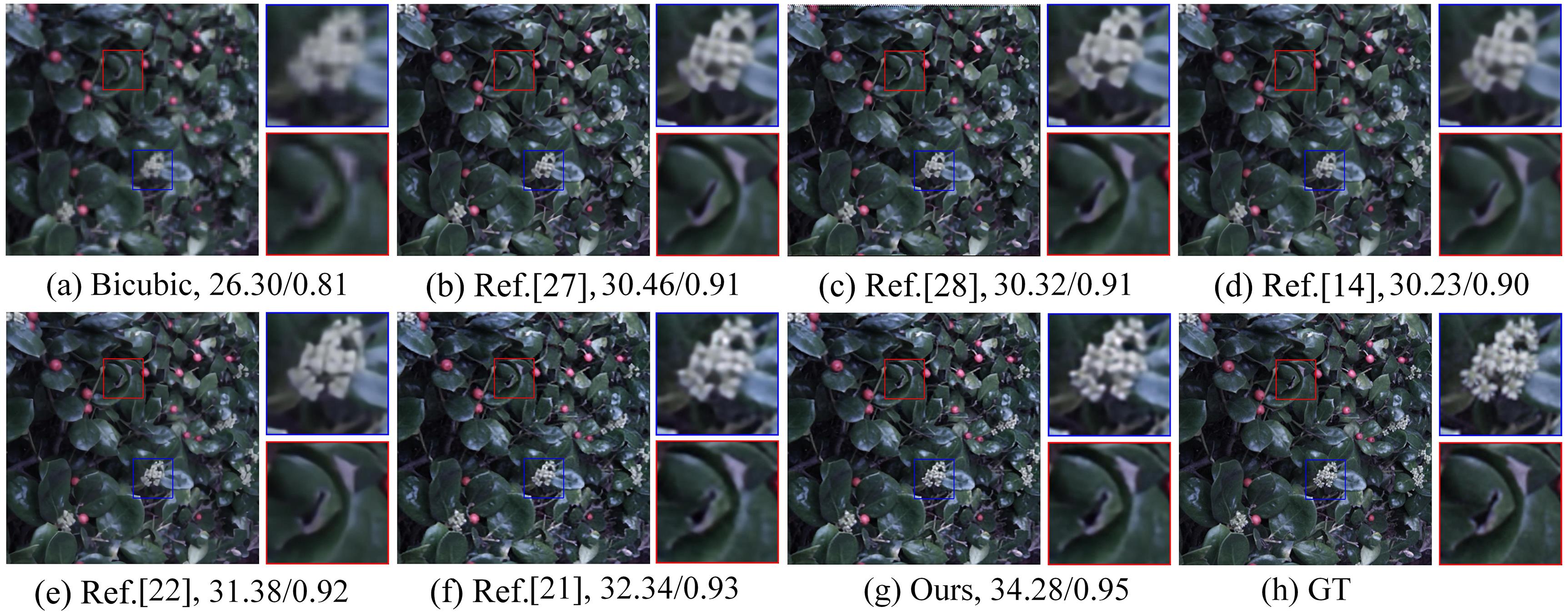

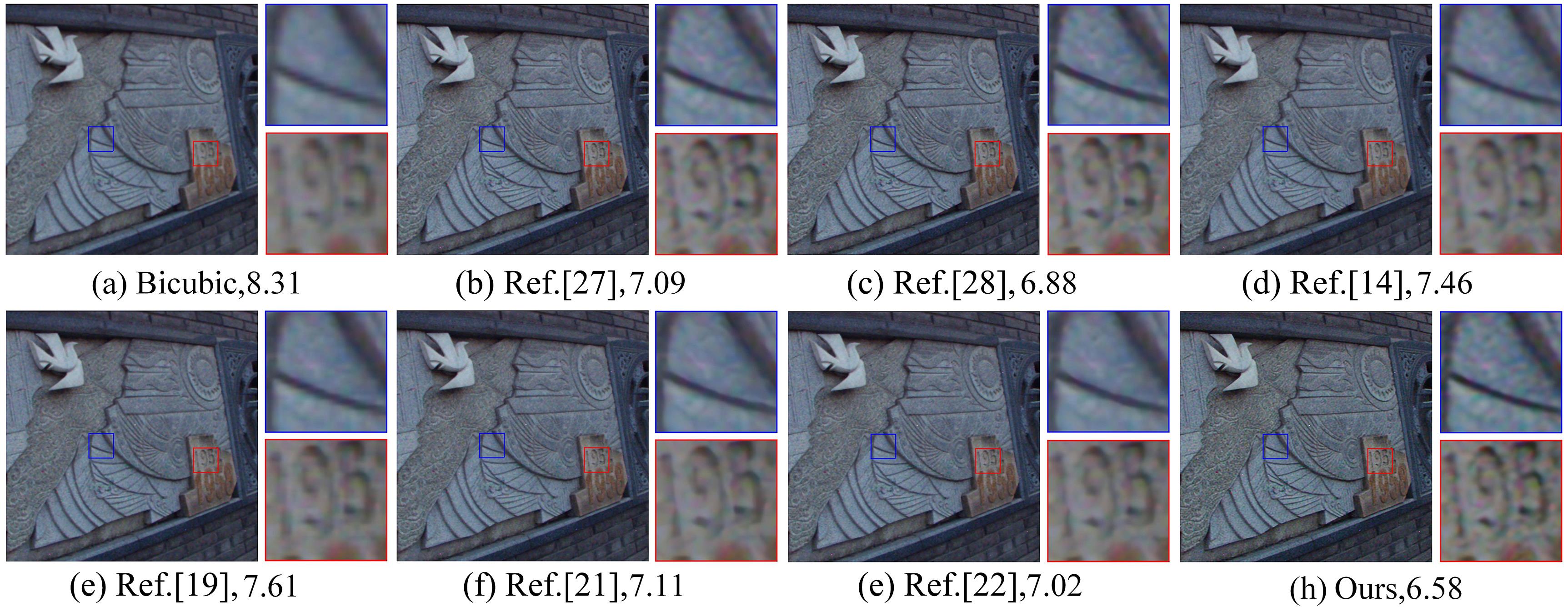

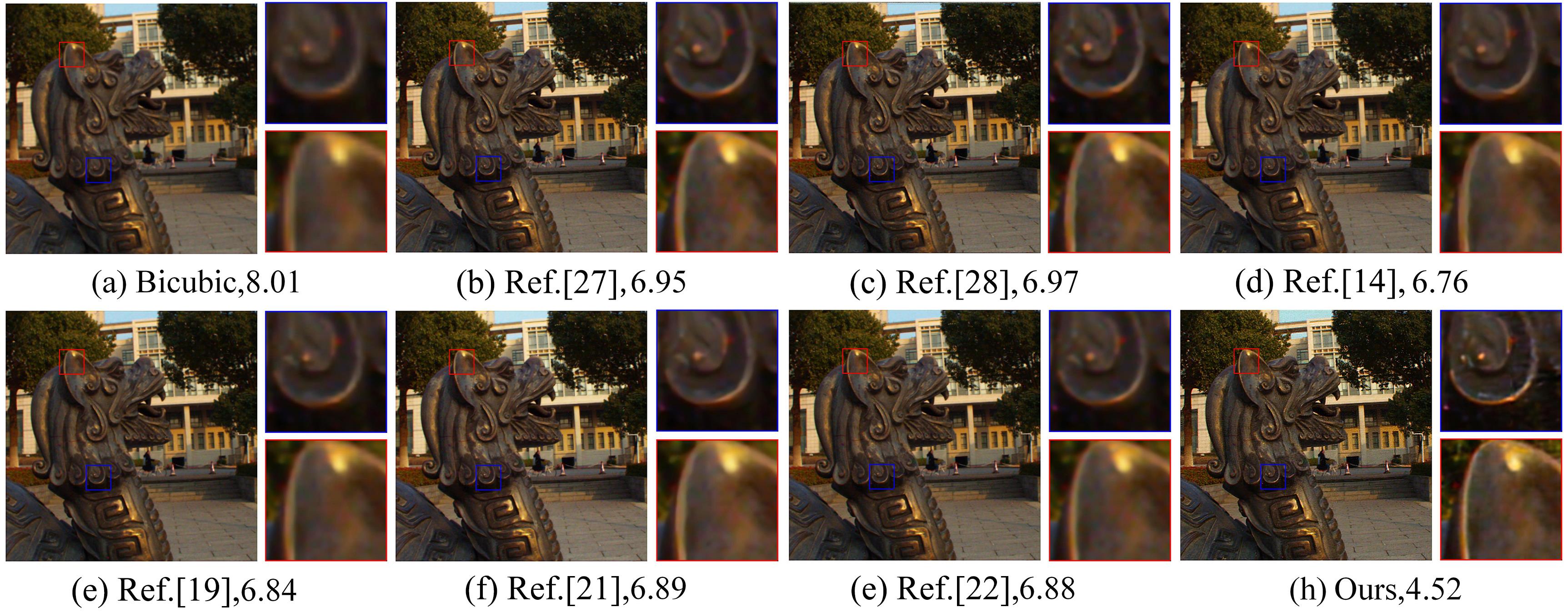

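The limited spatial resolution of light field camera sensors hinders progress in light field image processing research. This paper proposes a spatial super-resolution algorithm for light field images that fuses global and local features, improving the ability to model global relationships among light field sub-views. Because images captured by light field cameras have low brightness, which severely degrades the quality of the super-resolved images, an improved 4D zero-reference deep curve estimation network (4D Zero-DCE-Net) is proposed; it makes full use of the information in all sub-views to raise the brightness of light field images. To address the low spatial resolution of light field images, a spatial super-resolution network model based on a generative adversarial network is proposed. The generator has three parts. The first is a network structure that combines a Transformer and 4D convolution in parallel, capturing global and local detail information with relatively shallow layers. The second is an Interactive Fusion Attention Module (IFAM), which effectively fuses the global self-attention and local detail information produced by the two branches. The third is a reconstruction module, PS-PA (Pixel Shuffle-Pixel Attention), which raises the spatial resolution of the whole light field. Finally, a relativistic discriminator guides the training of the generator. Experimental results show that, compared with other algorithms, the proposed algorithm improves the peak signal-to-noise ratio (PSNR) by at least 1 dB.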
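To put the reported PSNR gain in context, the sketch below computes PSNR from its standard definition, 10·log10(peak² / MSE), and converts a dB gain into the corresponding reduction in mean squared error. The function name `psnr`, the toy pixel values, and the peak value of 1.0 are illustrative assumptions, not data or code from the paper.

```python
import math

def psnr(reference, estimate, peak=1.0):
    """Peak signal-to-noise ratio in dB between two equally sized pixel sequences."""
    if len(reference) != len(estimate):
        raise ValueError("inputs must have the same length")
    mse = sum((r - e) ** 2 for r, e in zip(reference, estimate)) / len(reference)
    if mse == 0:
        return math.inf  # identical inputs
    return 10.0 * math.log10(peak ** 2 / mse)

# Toy pixel values in [0, 1]; hypothetical, not taken from the paper.
reference = [0.10, 0.50, 0.90, 0.30]
baseline = [0.14, 0.46, 0.94, 0.26]  # every pixel off by 0.04
improved = [0.13, 0.47, 0.93, 0.27]  # every pixel off by 0.03

gain_db = psnr(reference, improved) - psnr(reference, baseline)
mse_ratio = 10 ** (-gain_db / 10)    # improved MSE divided by baseline MSE

# MSE ratio implied by a gain of exactly 1 dB.
one_db_mse_ratio = 10 ** (-1 / 10)

print(f"gain: {gain_db:.2f} dB, MSE ratio: {mse_ratio:.4f}")
print(f"a 1 dB gain scales MSE by {one_db_mse_ratio:.3f}")
```

Because PSNR is logarithmic in the error, a gain of 1 dB corresponds to roughly a 20% lower mean squared error, regardless of the absolute PSNR level.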

CLC number:

- TP391

